Case Study: Two Tier Clos Fabric

The main steps in designing a network infrastructure are as follows:

- Use text and/or diagrams to create an infrastructure description
- Then use the standardized schema to capture the infrastructure description in a machine-readable format by doing the following:
    - Add infrastructure device subgraphs, using components and links to create edges between components
    - Add infrastructure instances to define the number of devices in a reusable manner
    - Add infrastructure links to define additional information that exists between device instances in the infrastructure
    - Add infrastructure edges between device instances

Infrastructure Description

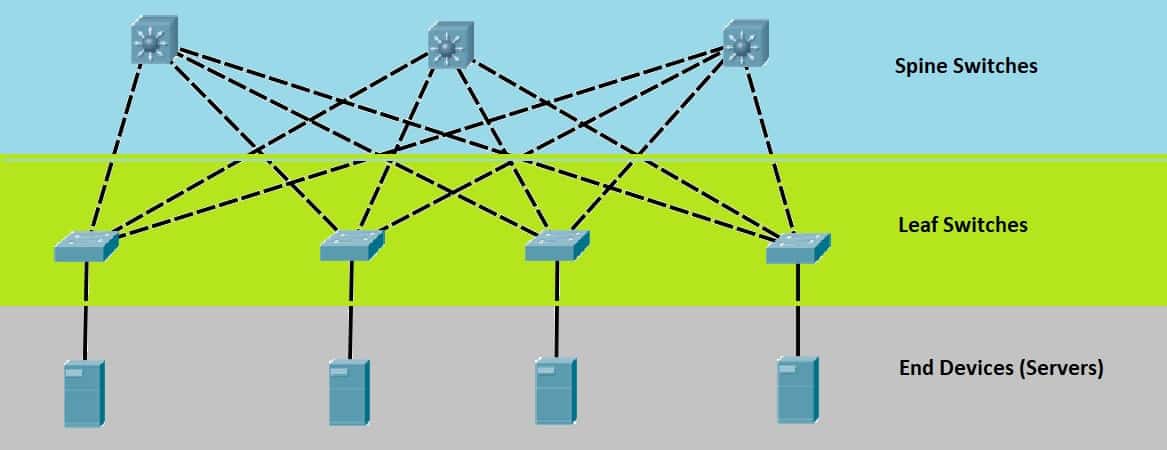

The following is a diagrammatic and textual description of a generic two-tier Clos fabric that will be modeled using the standardized schema.

It consists of the following devices:

- 4 generic servers, each composed of 4 xpus and 4 nics, with each nic directly connected to one xpu via a pcie link. Every xpu in a server is also connected to every other xpu by an nvlink switch. In addition, each server includes a management nic that is separate from the test nics.
- 4 leaf switches, each composed of one asic and 16 ethernet ports
- 3 spine switches, each composed of one asic and 16 ethernet ports

The above devices will be interconnected in the following manner:

- each leaf switch is connected directly to 1 server and to all spine switches
- every server is connected to a leaf switch at 100 Gbps
- every leaf switch is connected to every spine switch at 400 Gbps
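As a quick sanity check on the description above, the following minimal sketch enumerates the links it implies in plain Python. It does not use the standardized schema. The function name `expected_links` and the `host`, `leafsw`, and `spinesw` labels are illustrative choices, and the sketch assumes that server i attaches to leaf switch i with a single link, which the description does not state explicitly.

```python
from itertools import product

SERVERS, LEAVES, SPINES = 4, 4, 3   # device counts from the description
LEAF_GBPS, SPINE_GBPS = 100, 400    # link speeds from the description


def expected_links():
    """Return (endpoint_a, endpoint_b, gbps) tuples implied by the description."""
    links = []
    # Assumption: server i attaches to leaf switch i (one server per leaf).
    for idx in range(SERVERS):
        links.append((f"host[{idx}]", f"leafsw[{idx}]", LEAF_GBPS))
    # Every leaf switch connects to every spine switch.
    for leaf, spine in product(range(LEAVES), range(SPINES)):
        links.append((f"leafsw[{leaf}]", f"spinesw[{spine}]", SPINE_GBPS))
    return links


links = expected_links()
leaf_links = [link for link in links if link[2] == LEAF_GBPS]
spine_links = [link for link in links if link[2] == SPINE_GBPS]
print(len(leaf_links), len(spine_links))
```

Under these assumptions the fabric has 4 server-to-leaf links and 4 x 3 = 12 leaf-to-spine links.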

Standardized Definitions

A standardized definition of the preceding two-tier Clos fabric can be created by following the steps below, starting with the device definitions:

- A device is a subgraph composed of components, where each edge connects two components using a link.
- It acts as a blueprint, allowing a single definition to be reused multiple times rather than repeated for every device.
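To illustrate why a blueprint plus a count is more compact than listing every device, here is a minimal sketch using plain dataclasses rather than the InfraGraph classes. `Blueprint`, `Instance`, `expand`, and the dotted node-name format are all hypothetical and exist only for this illustration; the switch component counts come from the infrastructure description.

```python
from dataclasses import dataclass


@dataclass
class Blueprint:
    """Hypothetical stand-in for a device definition: component name -> count."""
    name: str
    components: dict


@dataclass
class Instance:
    """Hypothetical stand-in for an instance entry: a blueprint reused `count` times."""
    name: str
    blueprint: Blueprint
    count: int


def expand(instance):
    """List every concrete component node that one instance entry stands for."""
    return [
        f"{instance.name}.{dev}.{comp}.{idx}"
        for dev in range(instance.count)
        for comp, n in instance.blueprint.components.items()
        for idx in range(n)
    ]


switch = Blueprint(name="switch", components={"asic": 1, "port": 16})
leaves = Instance(name="leafsw", blueprint=switch, count=4)
nodes = expand(leaves)
print(len(nodes), nodes[0], nodes[-1])
```

One blueprint and one instance entry stand for 4 x 17 = 68 component nodes here, which is the reuse the blueprint idea is meant to provide.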

Create a Server Device

Define a server device based on the infrastructure description.

Server device definition using OpenApiArt generated classes

from infragraph import *

# pyright: reportArgumentType=false


class Server(Device):
    def __init__(self, npu_factor: int = 1):
        """Adds an InfraGraph device to infrastructure based on the following components:
        - 1 cpu for every 2 npus
        - 1 pcie switch for every 1 cpu
        - X npus = npu_factor * 2
        - 1 nic for every xpu with 2 nics connected to a pcie switch
        - 1 nvswitch connected to all npus
        """
        super(Device, self).__init__()
        self.name = "server"
        self.description = "A generic server with npu_factor * 4 xpu(s)"

        # Components: each entry is a component type with a count
        cpu = self.components.add(
            name="cpu",
            description="Generic CPU",
            count=npu_factor,
        )
        cpu.choice = Component.CPU
        xpu = self.components.add(
            name="xpu",
            description="Generic GPU/XPU",
            count=npu_factor * 2,
        )
        xpu.choice = Component.XPU
        nvlsw = self.components.add(
            name="nvlsw",
            description="NVLink Switch",
            count=1,
        )
        nvlsw.choice = Component.SWITCH
        pciesw = self.components.add(
            name="pciesw",
            description="PCI Express Switch Gen 4",
            count=npu_factor,
        )
        pciesw.choice = Component.SWITCH
        nic = self.components.add(
            name="nic",
            description="Generic Nic",
            count=npu_factor * 2,
        )
        nic.choice = Component.NIC
        mgmt = self.components.add(
            name="mgmt",
            description="Mgmt Nic",
            count=1,
        )
        mgmt.custom.type = "mgmt-nic"

        # Links referenced by the edges below
        cpu_fabric = self.links.add(name="fabric", description="CPU Fabric")
        nvlink = self.links.add(name="nvlink")
        pcie = self.links.add(name="pcie")

        # The management nic attaches to the first cpu
        edge = self.edges.add(scheme=DeviceEdge.ONE2ONE, link=pcie.name)
        edge.ep1.component = mgmt.name
        edge.ep2.component = f"{cpu.name}[0]"

        # The cpus are interconnected over the cpu fabric
        edge = self.edges.add(scheme=DeviceEdge.MANY2MANY, link=cpu_fabric.name)
        edge.ep1.component = cpu.name
        edge.ep2.component = cpu.name

        # Every xpu attaches to the nvlink switch
        edge = self.edges.add(scheme=DeviceEdge.MANY2MANY, link=nvlink.name)
        edge.ep1.component = xpu.name
        edge.ep2.component = nvlsw.name

        # One pcie switch per cpu
        for idx in range(pciesw.count):
            edge = self.edges.add(scheme=DeviceEdge.MANY2MANY, link=pcie.name)
            edge.ep1.component = f"{cpu.name}[{idx}]"
            edge.ep2.component = f"{pciesw.name}[{idx}]"

        # Each pcie switch serves a slice of 2 xpus and a slice of 2 nics
        npu_slices = [f"{idx}:{idx+2}" for idx in range(0, xpu.count, 2)]
        for npu_idx, pciesw_idx in zip(npu_slices, range(pciesw.count)):
            edge = self.edges.add(scheme=DeviceEdge.MANY2MANY, link=pcie.name)
            edge.ep1.component = f"{xpu.name}[{npu_idx}]"
            edge.ep2.component = f"{pciesw.name}[{pciesw_idx}]"
        for nic_idx, pciesw_idx in zip(npu_slices, range(pciesw.count)):
            edge = self.edges.add(scheme=DeviceEdge.MANY2MANY, link=pcie.name)
            edge.ep1.component = f"{nic.name}[{nic_idx}]"
            edge.ep2.component = f"{pciesw.name}[{pciesw_idx}]"


if __name__ == "__main__":
    device = Server(npu_factor=2)
    device.validate()
    print(device.serialize(encoding=Device.YAML))

Server device definition as yaml (output for the default npu_factor=1)

components:
- choice: cpu
  count: 1
  description: Generic CPU
  name: cpu
- choice: xpu
  count: 2
  description: Generic GPU/XPU
  name: xpu
- choice: switch
  count: 1
  description: NVLink Switch
  name: nvlsw
- choice: switch
  count: 1
  description: PCI Express Switch Gen 4
  name: pciesw
- choice: nic
  count: 2
  description: Generic Nic
  name: nic
- choice: custom
  count: 1
  custom:
    type: mgmt-nic
  description: Mgmt Nic
  name: mgmt
description: A generic server with npu_factor * 4 xpu(s)
edges:
- ep1:
    component: mgmt
  ep2:
    component: cpu[0]
  link: pcie
  scheme: one2one
- ep1:
    component: cpu
  ep2:
    component: cpu
  link: fabric
  scheme: many2many
- ep1:
    component: xpu
  ep2:
    component: nvlsw
  link: nvlink
  scheme: many2many
- ep1:
    component: cpu[0]
  ep2:
    component: pciesw[0]
  link: pcie
  scheme: many2many
- ep1:
    component: xpu[0:2]
  ep2:
    component: pciesw[0]
  link: pcie
  scheme: many2many
- ep1:
    component: nic[0:2]
  ep2:
    component: pciesw[0]
  link: pcie
  scheme: many2many
links:
- description: CPU Fabric
  name: fabric
- name: nvlink
- name: pcie
name: server
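The slice-based edges in the server definition pair each pcie switch with a group of two xpus and two nics. The following standalone sketch reproduces only the index arithmetic from the `Server` constructor, without importing InfraGraph. The function name `pcie_groups` is illustrative, and the sketch assumes the slice notation is half-open in the same way as Python slices.

```python
def pcie_groups(npu_factor):
    """Reproduce the slice arithmetic used for the xpu/nic to pcie switch edges."""
    xpu_count = npu_factor * 2   # same count the Server class gives xpus and nics
    pciesw_count = npu_factor    # one pcie switch per cpu
    slices = [f"{idx}:{idx + 2}" for idx in range(0, xpu_count, 2)]
    # zip pairs the n-th slice with the n-th pcie switch
    return [
        (f"xpu[{s}]", f"nic[{s}]", f"pciesw[{sw}]")
        for s, sw in zip(slices, range(pciesw_count))
    ]


print(pcie_groups(1))
print(pcie_groups(2))
```

With `npu_factor=1` this yields the single `xpu[0:2]` / `nic[0:2]` group on `pciesw[0]` that appears in the yaml; with `npu_factor=2` a second group on `pciesw[1]` is added.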

Create a Switch Device

Define a switch device based on the infrastructure description.

Switch device definition using OpenApiArt generated classes

from infragraph import *

# pyright: reportArgumentType=false


class Switch(Device):
    def __init__(self, port_count: int = 16):
        """Adds an InfraGraph device to infrastructure based on the following components:
        - 1 generic asic
        - port_count number of ports
        - integrated circuitry connecting ports to asic
        """
        super(Device, self).__init__()
        self.name = "switch"
        self.description = "A generic switch"

        asic = self.components.add(
            name="asic",
            description="Generic ASIC",
            count=1,
        )
        asic.choice = Component.CPU
        port = self.components.add(
            name="port",
            description="Generic port",
            count=port_count,
        )
        port.choice = Component.PORT

        # A single edge connects the asic to every port over the integrated circuitry
        ic = self.links.add(name="ic", description="Generic integrated circuitry")
        edge = self.edges.add(scheme=DeviceEdge.MANY2MANY, link=ic.name)
        edge.ep1.component = asic.name
        edge.ep2.component = port.name


if __name__ == "__main__":
    device = Switch()
    print(device.serialize(encoding=Device.YAML))

Switch device definition as yaml

components:
- choice: cpu
  count: 1
  description: Generic ASIC
  name: asic
- choice: port
  count: 16
  description: Generic port
  name: port
description: A generic switch
edges:
- ep1:
    component: asic
  ep2:
    component: port
  link: ic
  scheme: many2many
links:
- description: Generic integrated circuitry
  name: ic
name: switch
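The edge scheme determines how many concrete connections a single edge entry stands for. The sketch below assumes that `many2many` connects every ep1 endpoint to every ep2 endpoint and that `one2one` pairs endpoints in index order. That reading is inferred from the scheme names rather than confirmed by this document, and `expand_edge` is a hypothetical helper, not part of InfraGraph.

```python
from itertools import product


def expand_edge(scheme, ep1, ep2):
    """Expand one edge entry into concrete (ep1, ep2) pairs.

    Assumed semantics (not confirmed by the source):
    - many2many: every ep1 endpoint connects to every ep2 endpoint
    - one2one: endpoints are paired by position and must match in number
    """
    if scheme == "many2many":
        return list(product(ep1, ep2))
    if scheme == "one2one":
        if len(ep1) != len(ep2):
            raise ValueError("one2one needs the same number of endpoints on both sides")
        return list(zip(ep1, ep2))
    raise ValueError(f"unknown scheme: {scheme}")


asic = ["asic.0"]
ports = [f"port.{idx}" for idx in range(16)]
pairs = expand_edge("many2many", asic, ports)
print(len(pairs), pairs[0], pairs[-1])
```

Under that assumption, the single `many2many` edge in the switch definition stands for 16 asic-to-port connections.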

Create an Infrastructure of Device Instances, Links and Edges

Define an infrastructure based on the infrastructure description.

Two Tier Clos Fabric Infrastructure using OpenApiArt generated classes

from infragraph import *
from infragraph.blueprints.devices.generic.server import Server
from infragraph.blueprints.devices.generic.generic_switch import Switch
from infragraph.infragraph_service import InfraGraphService


class ClosFabric(Infrastructure):
    """Return a 2 tier clos fabric with the following characteristics:
    - 4 generic servers
    - each generic server with 2 npus and 2 nics
    - 4 leaf switches each with 16 ports
    - 3 spine switches each with 16 ports
    - connectivity between servers and leaf switches is 100G
    - connectivity between leaf switches and spine switches is 400G
    """

    def __init__(self):
        super().__init__(name="closfabric", description="2 Tier Clos Fabric")

        # Device blueprints shared by all instances
        server = Server()
        switch = Switch()
        self.devices.append(server).append(switch)

        # Instances: how many copies of each blueprint exist in the fabric
        hosts = self.instances.add(name="host", device=server.name, count=4)
        leaf_switches = self.instances.add(name="leafsw", device=switch.name, count=4)
        spine_switches = self.instances.add(name="spinesw", device=switch.name, count=3)

        # Links: characteristics shared by the edges that reference them
        leaf_link = self.links.add(
            name="leaf-link",
            description="Link characteristics for connectivity between servers and leaf switches",
        )
        leaf_link.physical.bandwidth.gigabits_per_second = 100
        spine_link = self.links.add(
            name="spine-link",
            description="Link characteristics for connectivity between leaf switches and spine switches",
        )
        spine_link.physical.bandwidth.gigabits_per_second = 400

        host_component = InfraGraphService.get_component(server, Component.NIC)
        switch_component = InfraGraphService.get_component(switch, Component.PORT)

        # link each host to one leaf switch
        for idx in range(hosts.count):
            edge = self.edges.add(scheme=InfrastructureEdge.ONE2ONE, link=leaf_link.name)
            edge.ep1.instance = f"{hosts.name}[{idx}]"
            edge.ep1.component = host_component.name
            edge.ep2.instance = f"{leaf_switches.name}[{idx}]"
            edge.ep2.component = switch_component.name

        # link every leaf switch to every spine switch
        for leaf_idx in range(leaf_switches.count):
            for spine_idx in range(spine_switches.count):
                edge = self.edges.add(scheme=InfrastructureEdge.ONE2ONE, link=spine_link.name)
                edge.ep1.instance = f"{leaf_switches.name}[{leaf_idx}]"
                edge.ep1.component = f"{switch_component.name}[{host_component.count + spine_idx}]"
                edge.ep2.instance = f"{spine_switches.name}[{spine_idx}]"
                edge.ep2.component = f"{switch_component.name}[{leaf_idx}]"
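The nested loop that links leaf and spine switches picks port indices so that no port is used twice on the same switch. The following sketch mirrors that index arithmetic in plain Python, without InfraGraph. The function name `uplink_ports` is illustrative, and `nic_count=2` corresponds to the nic count of a default `Server()`.

```python
def uplink_ports(leaf_count=4, spine_count=3, nic_count=2):
    """Mirror the leaf/spine port arithmetic from the nested loop.

    nic_count is the number of nics on a default Server (npu_factor=1); the loop
    uses it as the offset of the first leaf port used for uplinks.
    """
    plan = []
    for leaf_idx in range(leaf_count):
        for spine_idx in range(spine_count):
            leaf_port = nic_count + spine_idx   # offset past the first nic_count ports
            spine_port = leaf_idx               # one spine port per leaf switch
            plan.append(
                (f"leafsw[{leaf_idx}]", f"port[{leaf_port}]",
                 f"spinesw[{spine_idx}]", f"port[{spine_port}]")
            )
    return plan


plan = uplink_ports()
# Each (switch, port) endpoint should appear at most once across the plan.
used = [(a, ap) for a, ap, _, _ in plan] + [(b, bp) for _, _, b, bp in plan]
print(len(plan), len(used) == len(set(used)))
```

The first and last tuples are `leafsw[0]` `port[2]` to `spinesw[0]` `port[0]` and `leafsw[3]` `port[4]` to `spinesw[2]` `port[3]`, which matches the spine-link edges in the generated yaml.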

ClosFabric infrastructure definition as yaml

description: 2 Tier Clos Fabric
devices:
- components:
  - choice: cpu
    count: 1
    description: Generic CPU
    name: cpu
  - choice: xpu
    count: 2
    description: Generic GPU/XPU
    name: xpu
  - choice: switch
    count: 1
    description: NVLink Switch
    name: nvlsw
  - choice: switch
    count: 1
    description: PCI Express Switch Gen 4
    name: pciesw
  - choice: nic
    count: 2
    description: Generic Nic
    name: nic
  - choice: custom
    count: 1
    custom:
      type: mgmt-nic
    description: Mgmt Nic
    name: mgmt
  description: A generic server with npu_factor * 4 xpu(s)
  edges:
  - ep1:
      component: mgmt
    ep2:
      component: cpu[0]
    link: pcie
    scheme: one2one
  - ep1:
      component: cpu
    ep2:
      component: cpu
    link: fabric
    scheme: many2many
  - ep1:
      component: xpu
    ep2:
      component: nvlsw
    link: nvlink
    scheme: many2many
  - ep1:
      component: cpu[0]
    ep2:
      component: pciesw[0]
    link: pcie
    scheme: many2many
  - ep1:
      component: xpu[0:2]
    ep2:
      component: pciesw[0]
    link: pcie
    scheme: many2many
  - ep1:
      component: nic[0:2]
    ep2:
      component: pciesw[0]
    link: pcie
    scheme: many2many
  links:
  - description: CPU Fabric
    name: fabric
  - name: nvlink
  - name: pcie
  name: server
- components:
  - choice: cpu
    count: 1
    description: Generic ASIC
    name: asic
  - choice: port
    count: 16
    description: Generic port
    name: port
  description: A generic switch
  edges:
  - ep1:
      component: asic
    ep2:
      component: port
    link: ic
    scheme: many2many
  links:
  - description: Generic integrated circuitry
    name: ic
  name: switch

edges:
- ep1:
    component: nic
    instance: host[0]
  ep2:
    component: port
    instance: leafsw[0]
  link: leaf-link
  scheme: one2one
- ep1:
    component: nic
    instance: host[1]
  ep2:
    component: port
    instance: leafsw[1]
  link: leaf-link
  scheme: one2one
- ep1:
    component: nic
    instance: host[2]
  ep2:
    component: port
    instance: leafsw[2]
  link: leaf-link
  scheme: one2one
- ep1:
    component: nic
    instance: host[3]
  ep2:
    component: port
    instance: leafsw[3]
  link: leaf-link
  scheme: one2one
- ep1:
    component: port[2]
    instance: leafsw[0]
  ep2:
    component: port[0]
    instance: spinesw[0]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[3]
    instance: leafsw[0]
  ep2:
    component: port[0]
    instance: spinesw[1]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[4]
    instance: leafsw[0]
  ep2:
    component: port[0]
    instance: spinesw[2]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[2]
    instance: leafsw[1]
  ep2:
    component: port[1]
    instance: spinesw[0]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[3]
    instance: leafsw[1]
  ep2:
    component: port[1]
    instance: spinesw[1]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[4]
    instance: leafsw[1]
  ep2:
    component: port[1]
    instance: spinesw[2]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[2]
    instance: leafsw[2]
  ep2:
    component: port[2]
    instance: spinesw[0]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[3]
    instance: leafsw[2]
  ep2:
    component: port[2]
    instance: spinesw[1]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[4]
    instance: leafsw[2]
  ep2:
    component: port[2]
    instance: spinesw[2]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[2]
    instance: leafsw[3]
  ep2:
    component: port[3]
    instance: spinesw[0]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[3]
    instance: leafsw[3]
  ep2:
    component: port[3]
    instance: spinesw[1]
  link: spine-link
  scheme: one2one
- ep1:
    component: port[4]
    instance: leafsw[3]
  ep2:
    component: port[3]
    instance: spinesw[2]
  link: spine-link
  scheme: one2one

instances:
- count: 4
  device: server
  name: host
- count: 4
  device: switch
  name: leafsw
- count: 3
  device: switch
  name: spinesw
links:
- description: Link characteristics for connectivity between servers and leaf switches
  name: leaf-link
  physical:
    bandwidth:
      choice: gigabits_per_second
      gigabits_per_second: 100
- description: Link characteristics for connectivity between leaf switches and spine switches
  name: spine-link
  physical:
    bandwidth:
      choice: gigabits_per_second
      gigabits_per_second: 400
name: closfabric